AI Agents will not make humans obsolete, but they will make them more effective

The hype around Agentic AI rivals that around Generative AI. To hear the enthusiasts tell it, AI agents are going to automate entire departments. They will soon take over all kinds of tasks from us, working completely autonomously and doing so much better and more safely than we could ourselves. But is that really the case? How useful is Agentic AI really? Can we put it to use in the public sector?

The answer: yes, the technology is going to help us enormously, if we use it wisely.

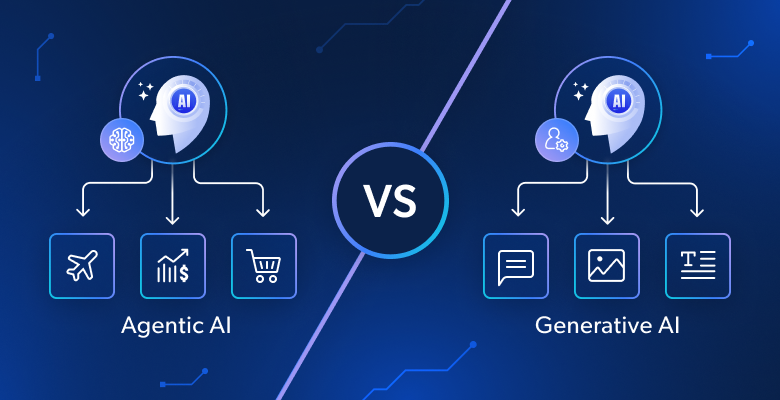

But let's start at the beginning. What is an AI agent? Imagine a large digital library with billions of books, articles, and conversations. A large language model (LLM), such as the one behind ChatGPT, is like a super-smart librarian who browses through all that information at lightning speed and answers your questions based on it. It is good at understanding and generating text, but it does so reactively: you ask a question, it gives an answer.

An AI agent is like that same smart librarian, but with access to all kinds of useful tools and systems. Instead of just responding to questions, an AI agent can take initiative on its own, perform actions, retrieve data, generate reports, learn from previous experiences, and collaborate with other systems.

The promise of AI agents is that they will be completely autonomous, that they will work proactively, make decisions, and be able to handle organizational processes end-to-end.

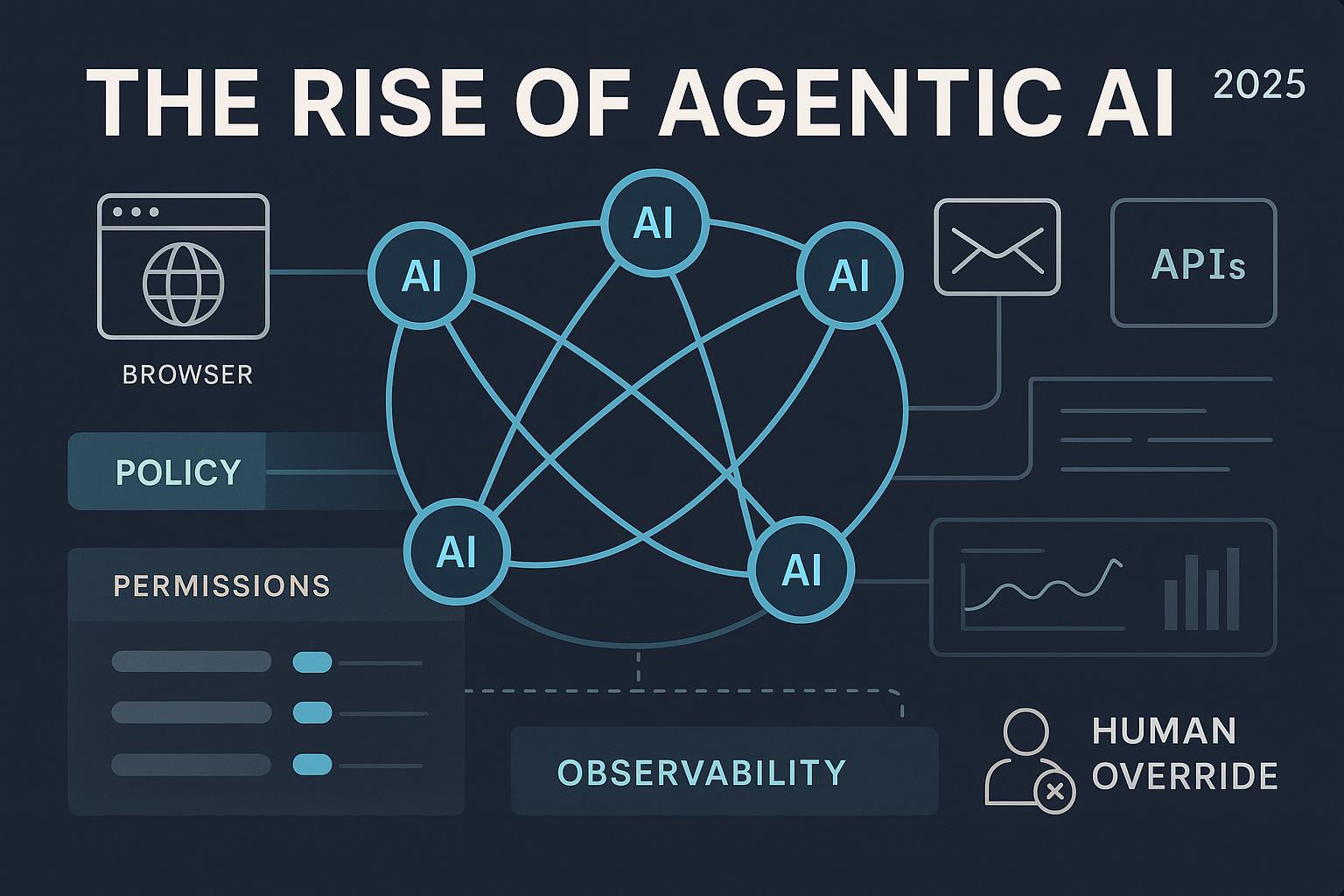

Our recent report, The Rise of Agentic AI, shows that things are not moving that fast. Most AI agents currently operate at a low level of autonomy. Yes, they automate tasks, but these are tasks that humans have defined in advance, with a predefined scope.

In the coming years, this degree of autonomy will increase, but only slowly. Even with advanced autonomy, people will remain firmly in the loop. AI decision-making will become more common, but organizations won’t be saying goodbye to human checks and balances anytime soon. The government is not being taken over by a super-intelligence that handles everything. Rather, we foresee small, well-defined agents being implemented that are capable of a great deal when used together.

These agents will often take on repetitive tasks. In dealing with citizens, and also in internal and external security, the government cannot afford to make mistakes. Human actions inevitably cause errors, particularly in repetitive work. And no matter how innocent or unintentional, such mistakes can have major consequences.

If set up in the right way, an AI agent does not make those mistakes, even when performing repetitive tasks. This allows a government to mitigate potentially large risks very effectively.

AI agents have another advantage: if you successfully automate repetitive tasks, you also free up people's time. They can then focus on tasks with greater added value, for example, improving the services the government provides to citizens.

AI agents are bringing about a fundamental shift in the management and execution of business processes. They are no longer simple tools but more or less full-fledged team members who can handle processes with less human intervention.

However, if you don’t understand how your processes work, it’s very difficult to deploy agents effectively and you won’t be able to use their capabilities to the fullest. A certain degree of standardization of processes is also desirable, since stable and standardized processes are easier to automate. In many cases, this will require rethinking or even redesigning workflows. Think before you start.

Our report shows that everyone expects a lot from Agentic AI, but at the same time, there is also a lot of trepidation. This applies to applications in the private sector, but doubly so in the public sector. There are concerns about algorithmic bias and the lack of transparency: AI is often seen as a “black box,” whose input and output cannot be traced.

Moreover, there is growing concern that AI is rapidly taking over human work and threatening employment. This lack of trust needs serious attention, especially in the public sector.

The application of Agentic AI must therefore be explainable and traceable in all cases, with great attention to privacy and with robust guardrails in the form of human monitoring and approval.
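What such guardrails might look like in practice can be illustrated with a small sketch. It is only an illustration under stated assumptions, not a description of any particular product or of the approach in the report: the names `ProposedAction` and `GuardedExecutor`, the scope list, and the approval callback are all hypothetical. The idea is that an agent only proposes actions, a human approves or rejects them, and every decision is recorded so it can be traced afterwards.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class ProposedAction:
    """An action an agent would like to take (hypothetical structure)."""
    name: str        # e.g. "draft_reply"
    rationale: str   # the agent's explanation, kept for explainability
    payload: dict    # the data the action would operate on


@dataclass
class GuardedExecutor:
    """Illustrative guardrail: scope check, human approval, audit trail."""
    allowed_actions: set[str]                     # the predefined scope
    approver: Callable[[ProposedAction], bool]    # stands in for a human reviewer
    audit_log: list[dict] = field(default_factory=list)

    def submit(self, action: ProposedAction) -> str:
        if action.name not in self.allowed_actions:
            outcome = "rejected_out_of_scope"
        elif self.approver(action):
            outcome = "approved"
        else:
            outcome = "rejected_by_human"
        # Assumption: the payload is deliberately NOT logged, to keep
        # personal data out of the audit trail.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action.name,
            "rationale": action.rationale,
            "outcome": outcome,
        })
        return outcome


if __name__ == "__main__":
    # The lambda simulates a reviewer who only approves low-risk actions.
    executor = GuardedExecutor(
        allowed_actions={"draft_reply"},
        approver=lambda a: a.payload.get("risk") == "low",
    )
    print(executor.submit(ProposedAction("draft_reply", "routine request", {"risk": "low"})))
    print(executor.submit(ProposedAction("delete_record", "cleanup", {"risk": "low"})))
    for entry in executor.audit_log:
        print(entry["action"], entry["outcome"])
```

In a real deployment the approver would be an actual person working through a review interface, and the audit trail would live in durable, access-controlled storage rather than in memory.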

So what is the conclusion? Agentic AI has the potential to improve the quality of life, well-being, and safety of citizens. But that won’t happen overnight. The scope of application of AI agents must be well defined and closely monitored. The underlying processes must be properly mapped out and, where necessary, standardized and rationalized.

Trust must be actively earned and maintained. And finally, we must never forget that working for the public good must ultimately always remain the work of people.

Joost Carpaij is Head of (Gen)AI, Data Science and Analytics and is responsible for the further development of this field within Capgemini. He is mainly involved with partners in the public security domain.

By Joost Carpaij, Head of (Gen)AI, Data Science and Analytics at Capgemini - Published by iBestuur.nl, October 30, 2025